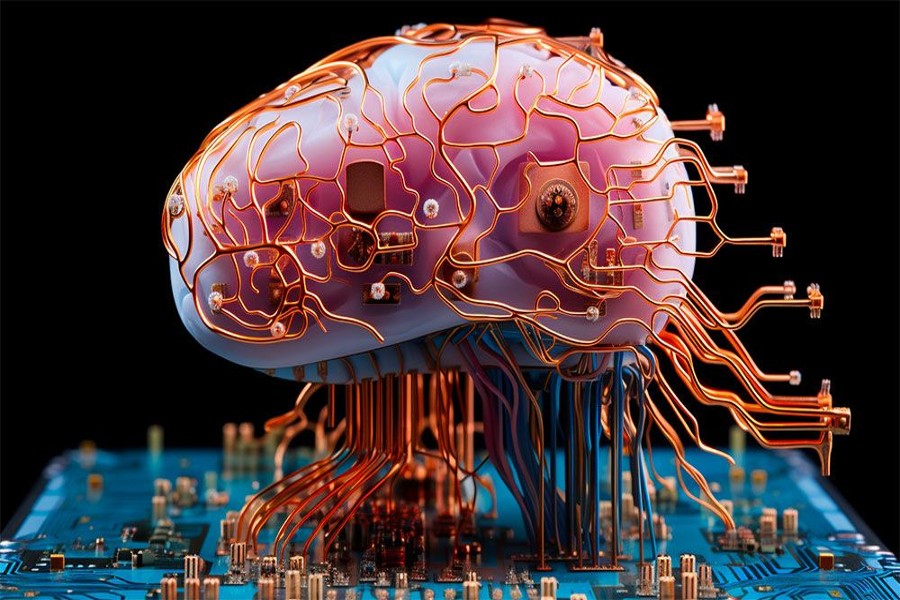

Shaping The Future of Brain Computer Interfacing with Physiological Signals

Brain Computer Interface (BCI), also called Brain Machine Interface, is an emerging technology that creates a communication channel between the human brain and a computer. Electroencephalography (EEG) signals are collected from the brain, analyzed, and translated into actions or commands to be performed by output devices. BCI systems use signals generated by the central nervous system, of which the brain is a part. After a period of training, the brain signals generated by the end user encode the user’s intention. Similarly, the BCI, also after training, interprets those signals and translates them into commands for an output device that carries out that intention. Thus, the end user and the BCI work together to attain the user’s goals.
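The collect, analyze, and translate steps described above can be sketched in a few lines of Python. This is only an illustrative sketch, not how any particular BCI works: the choice of the 8 to 12 Hz band as the feature, the threshold value, the `SELECT`/`IDLE` command names, and the synthetic sine-wave "EEG" are all assumptions made for the example.

```python
import math

def band_power(samples, fs, f_lo, f_hi):
    """Average normalized DFT power over the bins between f_lo and f_hi (Hz)."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the DC offset
    powers = []
    for k in range(1, n // 2):
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centered))
            im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centered))
            powers.append((re * re + im * im) / (n * n))
    return sum(powers) / len(powers) if powers else 0.0

def translate(samples, fs=128, threshold=0.01):
    """Analyze one window of signal and translate it into a command.

    The 8-12 Hz band and the threshold are hypothetical choices for this sketch.
    """
    return "SELECT" if band_power(samples, fs, 8.0, 12.0) > threshold else "IDLE"

def synthetic_eeg(freq_hz, amplitude, fs=128, seconds=1):
    """Stand-in for signal acquisition: a pure sine wave, not real EEG."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / fs)
            for i in range(fs * seconds)]

print(translate(synthetic_eeg(10, 1.0)))  # strong 10 Hz activity
print(translate(synthetic_eeg(30, 1.0)))  # activity outside the band
```

In a real system the threshold would be replaced by a model trained on the user's own recordings, which is the training step the paragraph above refers to.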

The most common applications of BCI include neurorehabilitation, assistive technology, cognitive neuroscience, and gaming and entertainment. BCI is considered a fast-growing technology that paves the way for innovations in health care and human computer interaction (HCI).

Enhanced Signal Acquisition

A large body of BCI research is carried out with EEG signals. In addition to EEG, signals such as EoG, ECG, and EMG can be used to advance HCI systems.

EEG records the electrical activity of the brain through electrodes placed on the scalp. In a similar manner, EoG records eye movements, ECG records the electrical activity of the heart, and EMG tracks the activity of muscles. Electrooculography (EoG) signals arise from the standing electrical potential between the cornea and the retina: the anterior (corneal) side of the eye is positive and the posterior (retinal) side is negative, so the potential measured near the eye changes as the eye moves.
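Because the cornea is positive relative to the retina, a horizontal eye movement shows up as a step in the potential measured across the eyes. The sketch below detects such steps in a single horizontal EoG channel. It assumes the channel is computed as the right electrode minus the left electrode, so a rightward movement gives a positive deflection; the `lag` and `threshold_uv` values and the function name are hypothetical and would need tuning on real recordings.

```python
def detect_saccades(heog_uv, lag=5, threshold_uv=50.0):
    """Return (sample_index, direction) for each abrupt step in the signal.

    heog_uv: horizontal EoG samples in microvolts (assumed right minus left).
    A step is flagged when the signal differs from its value `lag` samples
    earlier by at least `threshold_uv`.
    """
    events = []
    i = lag
    while i < len(heog_uv):
        delta = heog_uv[i] - heog_uv[i - lag]
        if abs(delta) >= threshold_uv:
            events.append((i, "right" if delta > 0 else "left"))
            i += lag  # skip ahead so one movement is reported only once
        else:
            i += 1
    return events

# Synthetic trace: gaze centre, then right, then back to centre.
trace = [0.0] * 20 + [100.0] * 20 + [0.0] * 20
print(detect_saccades(trace))
```

Note that the return to centre is reported as a leftward step, since the detector only sees relative changes in potential.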

Integrating EoG into BCI paves the way for numerous applications such as driver drowsiness detection, gaze-based interaction, and sports and entertainment based on virtual reality and augmented reality. In a similar manner, ECG and EMG signals can also be incorporated into BCI, improving the efficiency and versatility of these systems.

Applications such as biometric authentication, gesture recognition, prosthetic control, and emotion recognition become feasible by combining EEG and EMG signals. By encompassing multiple physiological signals, BCI systems can build a more comprehensive picture of the user’s cognitive and physical state, which leads towards multi-modal fusion. Customized applications can also be created based on the user’s unique physiological responses, promoting inclusivity and convenience in human-computer interaction.
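One simple form of multi-modal fusion is feature-level fusion: features from each signal are normalized separately and then concatenated into a single vector per trial. The sketch below illustrates the idea with z-score normalization. The function names and the feature values are invented for the example, and other fusion strategies (for instance combining the decisions of per-signal classifiers) are equally possible.

```python
from statistics import mean, pstdev

def zscore(column):
    """Scale one feature column to zero mean and unit variance."""
    mu, sigma = mean(column), pstdev(column)
    return [(v - mu) / sigma if sigma > 0 else 0.0 for v in column]

def normalize(features):
    """Z-score every column of a list of rows (one row per trial)."""
    columns = [zscore(col) for col in zip(*features)]
    return [list(row) for row in zip(*columns)]

def fuse(*modalities):
    """Concatenate the normalized features of each modality, trial by trial."""
    normalized = [normalize(m) for m in modalities]
    return [sum(rows, []) for rows in zip(*normalized)]

# Made-up features for three trials: two EEG features and one EMG feature.
eeg = [[10.0, 2.0], [12.0, 4.0], [14.0, 6.0]]
emg = [[1.0], [3.0], [5.0]]
for row in fuse(eeg, emg):
    print(row)
```

Normalizing each modality first keeps a signal with large raw values from dominating the fused vector.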

Machine Learning Powers BCI

Brain computer interfaces enable users to control external devices using the mind. BCIs are assistive neurotechnology that may help people who are paralyzed or have impaired speech to control robotic limbs, operate a motorized wheelchair, or move a computer cursor. Such capabilities can be taken to the next level by combining BCI with machine learning techniques. The recorded electrical signals may be used to predict the user’s activities, and numerous supervised and unsupervised algorithms are applicable to BCI tasks. Such artificial intelligence (AI) based innovations can lead to high-performance devices and improve the quality of life of elderly people.

Machine learning (ML) is an influential discipline at the intersection of statistics and computer science that enables computers to perform tasks without being explicitly programmed. With advancements in technology, ML has made huge progress in application areas such as monitoring, diagnosis, classification, and detection. ML techniques play a predominant part in data analysis in BCI systems. Because existing BCI systems on their own are not adequate for learning and interpreting complex brain activity, combining ML with BCIs paves the way for new applications.

Potential Applications

Emotion recognition is a developing research area driven by the need for automated systems that monitor sleep, stress, and different human emotions. Signals such as EEG, heart rate, and skin conductance can be measured, and supervised algorithms may be applied to classify the person’s mental state. Such applications are also well suited to hospitals, where they can track the physical and mental wellbeing of patients.
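As a rough illustration of applying a supervised algorithm to such measurements, the sketch below uses a nearest-centroid classifier, chosen here only because it is short enough to write from scratch; the article does not prescribe a specific algorithm. Each sample is assumed to hold three features (an EEG feature, heart rate, skin conductance) already scaled to the range 0 to 1, and the training values and the "calm"/"stressed" labels are invented for the example.

```python
import math

def fit_centroids(samples, labels):
    """Learn one mean feature vector (centroid) per class label."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}

def predict(centroids, x):
    """Assign x to the class whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda y: math.dist(centroids[y], x))

# Invented, pre-scaled training data: [EEG feature, heart rate, skin conductance].
train_x = [[0.2, 0.3, 0.1], [0.3, 0.2, 0.2], [0.8, 0.9, 0.7], [0.9, 0.8, 0.8]]
train_y = ["calm", "calm", "stressed", "stressed"]

model = fit_centroids(train_x, train_y)
print(predict(model, [0.25, 0.25, 0.20]))
print(predict(model, [0.85, 0.80, 0.90]))
```

With real recordings, the features would need to be extracted and scaled per user, and the classifier evaluated on data it was not trained on.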

Neurofeedback systems also help individuals monitor their stress level, sleep quality, and anxiety. Hands-free control of devices, smart home automation based on user states such as stress level or fatigue, and personalized user interfaces are further potential applications.

Assistive technologies can be created for individuals with special needs or disabilities. Customized interfaces based on muscle activity, eye movements, and brain signals can give users improved communication and control capabilities.
